Conventional supervised learning algorithms need large amounts of labeled data. When that data is scarce, model performance degrades. Transfer learning lets the knowledge gained on one task be reused on a new, related task. This can speed up training and improve performance in the new domain.

A quick primer

Imagine we're learning to ride a bicycle. Initially, we struggle with balance and coordination, but once we master it, those skills can be surprisingly useful when learning to ride a scooter. Transfer learning in AI is somewhat analogous; it's the art of leveraging knowledge gained from one task to improve performance on another.

The Mechanisms

Transfer learning operates on the premise that models can utilize their understanding of one problem to tackle a related, yet distinct, problem more efficiently. Key mechanisms:

Feature Extraction:

At the heart of transfer learning lies the concept of feature extraction. Consider a neural network designed to recognize objects in images (say, cats and dogs). The early layers of this network learn basic features like edges, textures, and patterns. In transfer learning, we can take these learned features and apply them to a new task, such as distinguishing between different breeds of dogs.

Insight - A neural network can be represented mathematically as a series of interconnected layers. During training, the weights are adjusted to minimize the difference between predicted and actual outcomes. In transfer learning, we freeze the early layers (the feature extractors), meaning their weights are excluded from updates, so the learned features are preserved. Only the later layers are trained for the new task.
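To make the freezing idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the `frozen_w` values stand in for pre-trained early-layer weights, the toy task (is the first input larger than the second?) stands in for the new task, and the names `extract_features` and `train_head` are hypothetical. A real project would load an actual pre-trained network through a deep learning framework.

```python
import copy
import math
import random


def extract_features(frozen_w, x):
    """Frozen 'early layer': a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in frozen_w]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(frozen_w, data, lr=0.1, epochs=200):
    """Train only the new head; frozen_w is read but never updated."""
    head = [0.0] * len(frozen_w)
    bias = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            h = extract_features(frozen_w, x)
            p = sigmoid(sum(a * b for a, b in zip(head, h)) + bias)
            # Binary cross-entropy, with a small epsilon for numerical safety.
            total -= y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12)
            grad = p - y  # gradient of the loss w.r.t. the head's pre-activation
            head = [w - lr * grad * hi for w, hi in zip(head, h)]
            bias -= lr * grad
        losses.append(total / len(data))
    return head, bias, losses


random.seed(0)

# Assumed stand-in for pre-trained weights (not taken from any real model).
frozen_w = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
snapshot = copy.deepcopy(frozen_w)

# Toy "new task": label is 1 when the first input exceeds the second.
data = []
for _ in range(40):
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    data.append((x, 1 if x[0] > x[1] else 0))

head, bias, losses = train_head(frozen_w, data)
print(f"average loss: first epoch {losses[0]:.3f}, last epoch {losses[-1]:.3f}")
print("frozen layer unchanged:", frozen_w == snapshot)
```

The point of the sketch is the split of responsibilities: gradients flow only into `head` and `bias`, so the reused features stay exactly as they were, and the new task is learned with far fewer trainable parameters.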

Fine-Tuning:

Fine-tuning is the process of adapting a pre-trained model to a new task by adjusting its parameters. This allows the model to refine its understanding of the specific nuances associated with the new problem. Going back to our bicycle riding analogy, it's like tweaking our riding technique to better suit the characteristics of a scooter.

Insight - Fine-tuning typically updates the model's weights with a smaller learning rate than was used for the original training. This keeps the previously acquired knowledge from being overwritten too quickly, so the model retains its general understanding while adapting to the specifics of the new task.
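The effect of the learning rate can be sketched with a tiny linear model. The setup is assumed for illustration only: `pretrained` is a made-up weight vector, `new_data` is a four-point toy dataset, and `fine_tune`, `drift`, and `mse` are hypothetical helper names. The sketch runs the same number of gradient steps with a small and a large learning rate, then compares how far the weights moved from their pre-trained values.

```python
import math


def mse(w, data):
    """Mean squared error of the linear model y ~ w . x."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in data) / len(data)


def fine_tune(pretrained, data, lr, steps):
    """Full-batch gradient descent on MSE, starting from the pre-trained weights."""
    w = list(pretrained)  # copy, so the pre-trained weights stay untouched
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def drift(a, b):
    """Euclidean distance between two weight vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


# Assumed pre-trained weights and a toy new-task dataset (illustrative values;
# the targets were generated from the weights [2.5, -0.5]).
pretrained = [2.0, -1.0]
new_data = [([1.0, 0.0], 2.5), ([0.0, 1.0], -0.5), ([1.0, 1.0], 2.0), ([1.0, -1.0], 3.0)]

w_small = fine_tune(pretrained, new_data, lr=0.01, steps=5)
w_large = fine_tune(pretrained, new_data, lr=0.5, steps=5)

print("drift with small learning rate:", drift(w_small, pretrained))
print("drift with large learning rate:", drift(w_large, pretrained))
print("new-task MSE before and after small-rate tuning:",
      mse(pretrained, new_data), mse(w_small, new_data))
```

In this toy setting both runs reduce the error on the new data, but the small-rate run stays much closer to the pre-trained weights. That is the trade-off fine-tuning manages: adapt to the new task without discarding what was already learned.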

Example

Consider a real-world example: Image Classification.

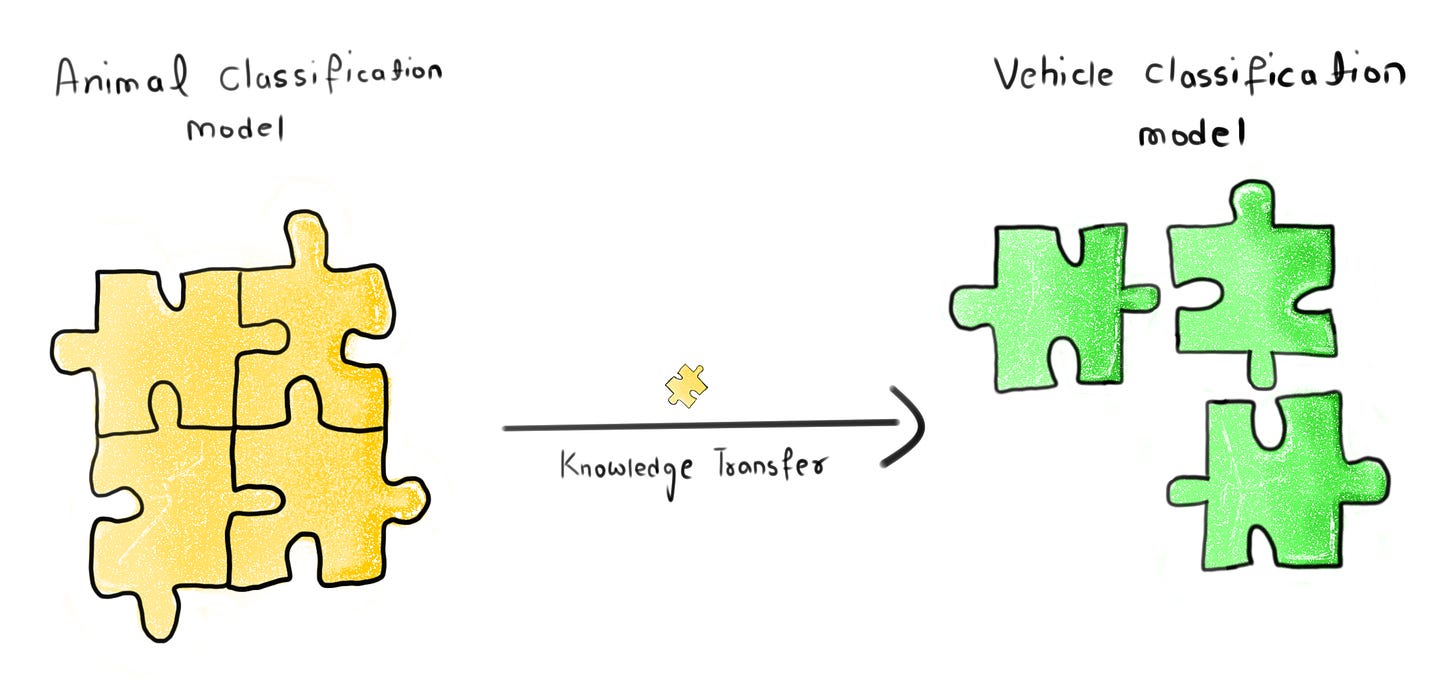

Base Task: We train a model to recognize various animals in images.

Transfer Task: Leveraging the learned features, we transfer the model to a new task, such as classifying different types of vehicles.

Conclusion

Transfer learning is one of the most useful techniques for saving time and resources: we start from an already trained model and fine-tune it for our own use case. It is like using the scikit-learn package for a particular algorithm instead of writing that algorithm from scratch, which we rarely do in practice.